My baptism into the world of computers occurred the Saturday my dad brought home an Apple IIc when I was a kid. He was always fascinated by electronic gadgets and this seemed to be the latest device that would sit in a room (and eventually gather dust) next to his ham radio and photography equipment. The damn thing didn’t do much, but it still felt magical every time I had the opportunity to play games on it: that funky beige box with a square hunk of plastic on a leash moving an arrow around a screen. At the time, it didn’t feel like anything more than a fancy toy to me. I certainly had no idea that this interaction would foreshadow my own love-hate relationship with the entire technology industry.

Years later, I turned into an Apple acolyte while working in the IT department of an East Coast university. I was an MCSE and had become thoroughly exhausted battling Windows drivers and the BSOD circle of Hell. After I moved to Unix administration, the first version of OS X felt like a relief after long days of command line combat. While it had an underlying CLI that worked similarly to the Unix platforms I was used to, Apple provided a more stable desktop that was painless for me to use. Unlike Windows, the hardware and the OS generally seemed to work. Sure, I didn’t have all the software packages I needed, but virtualization solved that problem. While I lost some of my expertise in building and fixing personal computers, I no longer seemed to have any interest in tinkering with them. During a mostly effortless, decade-long relationship with Apple, I would jokingly tell friends, “Buy a Mac, it will make you stupid.” I became an evangelist: “Technology is just a tool. Don’t love the hammer, love what you can make with it.”

But as often happens in romances, this one has hit a rocky patch. Lately, trusting the quality of Apple products feels akin to believing in Santa Claus, honest politicians or a truly benevolent God.

The relationship started to founder about six months ago, when I was unlucky enough to have the hard drive fail in my 2013 27″ iMac. It had been a workhorse, but the warranty had expired a few months before and I was in the unwelcome position of choosing between options ranging from bad to worse. I could try to replace it myself, but after looking at the iFixit teardown, that idea was quickly nixed. The remaining choices were to pay Apple or buy a new system between release cycles. After running the cost/benefit analysis in my head, the last option made the most sense, since the warranties on both of my laptops had also expired. With some trepidation, I purchased the newly redesigned 2016 MacBook Pro with an external LG monitor. And here’s where my struggles began.

Within a few days of setting up the laptop, I noticed some flakiness with USB-C connections. Sometimes external hard drives would drop after the system was idle. In clamshell mode, I often couldn’t get the external monitor to wake from sleep. I researched the issues online, making recommended tweaks to the configuration. I upgraded the firmware on my LG monitor, even though the older MacBook Pro provided by my workplace never had any issues. Then I did what any good technology disciple would do and opened a case with Apple, dutifully sending in my diagnostic data, trusting that Support would cradle me in their beneficence. The case was escalated to Engineering with confirmation that it was a known timing issue to be addressed by a future software or firmware patch. In the meantime, I refrained from using the laptop in clamshell mode and got used to resetting the monitor connection by unplugging it or rebooting the laptop. I also bought a dock that solved my problems with external hard drives. Then I waited.

Like the rest of the faithful, I watched last week’s WWDC with bated breath. I was euphoric after seeing the new iMac Pro and already imagining my next purchase. But a few days later, after having to reset my external monitor connection four times in a 12-hour period, I emailed the Apple Support person I had been working with, Steve*, for a status update on the fix for the issue with my MacBook Pro. I happened to be in Whole Foods when he called me back, and the poetic justice of arguing over a $3,000 laptop in the overpriced organic produce aisle wasn’t lost on me.

Support Steve: Engineering is still working on it.

Mrs. Y: What does that mean? Do you know how long this case has been open? I think dinosaurs still roamed the earth.

Support Steve: The case is still open with Engineering.

Mrs. Y: If you’re releasing a new MacBook Pro, why can’t you fix mine? Is this a known issue with the new laptops as well? If not, can’t you just replace mine?

Support Steve: That isn’t an option.

Mrs. Y: Please escalate this case to a supervisor.

Support Steve: There’s no supervisor to escalate to. Escalation goes to Engineering; I only have a supervisor for administrative purposes.

Mrs. Y: I want to escalate this case to the person you report to, because I’m not happy with how it’s being handled.

Then the line went dead.

While Support Steve never raised his voice, for the record, a polite asshole is still an asshole.

This experience has done more than sour me on Apple; it’s left me in a full-fledged, technology-induced existential crisis. How could this happen to me, one of the faithful? I follow all the rules. I always run supported configurations and patch my systems. I buy AppleCare!

I even considered going the Hackintosh route. But I don’t know if I can go back to futzing with hardware, praying that a software update won’t make my system unusable. And Apple’s slick hardware still maintains an unnatural, cult-like hold over me.

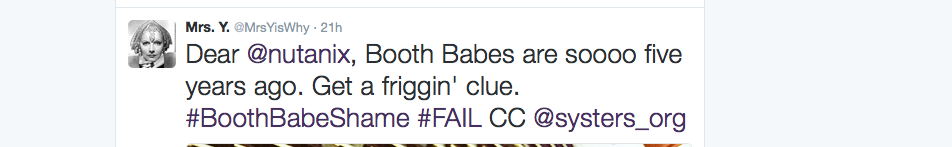

So, like a disaffected Catholic, I continue to hold out hope that Apple will restore my faith, because it’s supposed to “Think Different” and be the billion-dollar company that cares about its customers. But I can’t even get anyone to respond on the Apple Support Twitter account, leaving me in despair over why my pleas fall on deaf ears.

*I’m not making this up: the Apple Support person’s name is actually “Steve.” But maybe that’s what they call all their support people as some kind of homage to the co-founder.